Lesson 1: What is Generative AI?

Lesson 1 Learning Objectives

By the end of this lesson, you will be able to:

- Describe the concept of generative AI, including its scope and capabilities.

- Explain the significance of generative AI in the field of artificial intelligence.

- Discuss how generative AI impacts innovation and creativity in content generation.

- Differentiate among the types and forms of generative AI, such as text generation, image and graphic design, video production, and music and audio.

What is Generative AI?

Generative AI, sometimes called GenAI, is a class of artificial intelligence capable of creating new content based on the vast amounts of data it has been trained on. This content can take various forms, such as text, images, audio, and video. At its core, generative AI uses machine learning algorithms, often based on deep neural networks, to identify patterns in existing data and use those patterns to generate novel outputs.
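To make "identify patterns in existing data and use those patterns to generate novel outputs" concrete, here is a deliberately tiny sketch in Python. It is not a deep neural network. It swaps in a much simpler technique, a bigram (word-pair) model, which records which word follows which in a small made-up corpus and then samples new sequences from those observations. The corpus, function names, and parameters are all assumptions chosen for illustration.

```python
import random
from collections import defaultdict

# Tiny made-up corpus, used only for illustration.
CORPUS = "the cat sat on the mat . the dog sat on the rug ."


def train_bigrams(text: str) -> dict[str, list[str]]:
    """Record, for each word, every word that followed it in the text."""
    words = text.split()
    followers = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return dict(followers)


def generate(followers: dict[str, list[str]], start: str,
             max_words: int = 8, seed: int = 0) -> str:
    """Build a sequence by repeatedly sampling an observed follower."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    output = [start]
    while len(output) < max_words and output[-1] in followers:
        output.append(rng.choice(followers[output[-1]]))
    return " ".join(output)


if __name__ == "__main__":
    model = train_bigrams(CORPUS)
    print(generate(model, "the"))
```

Every word pair in the generated text appeared somewhere in the corpus, yet the full sequence may be one the corpus never contained. Modern generative models learn far richer patterns from far more data, but the train-then-sample workflow is loosely similar.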

Before sharing some examples of the different forms generative AI can take, let’s get some historical context.

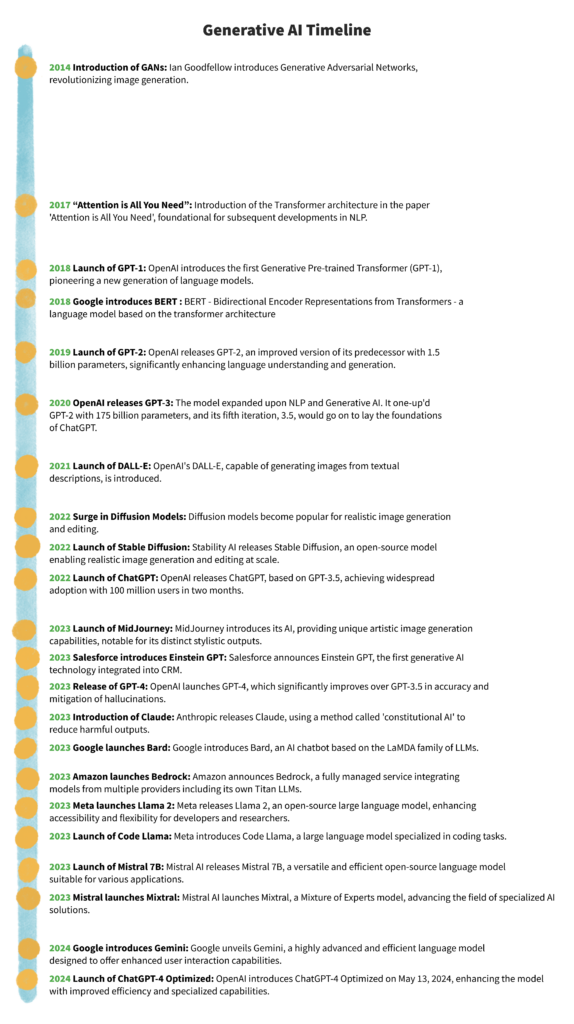

Historical Context

The field of AI has evolved significantly since its inception in the middle of the 20th century, with phases of hype (AI booms), disappointment (AI winters), setbacks and advancement. The earliest attempts to generate natural language, such as “chatterbots” (now called AI chatbots) like Joseph Weizenbaum’s ELIZA in the 1960s, were limited in their scope. They required explicit programming rules and generated formulaic responses to user inputs.
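The rule-based approach described above can be sketched in a few lines of Python. This is only an illustration of the general idea (hand-written patterns paired with canned reply templates). The rules below are hypothetical and are not taken from Weizenbaum’s original ELIZA script.

```python
import re

# Hypothetical rules for illustration; not the original ELIZA script.
# Each rule pairs a hand-written pattern with a formulaic reply template.
RULES = [
    (re.compile(r"\bi need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bbecause\b", re.IGNORECASE), "Is that the real reason?"),
]
FALLBACK = "Please tell me more."


def respond(user_input: str) -> str:
    """Return the reply for the first rule that matches, else a fallback."""
    text = user_input.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            # Reuse the user's own words inside the canned template.
            return template.format(*match.groups())
    return FALLBACK


if __name__ == "__main__":
    for line in ["I need a vacation.", "I am tired", "The weather is nice"]:
        print(f"> {line}")
        print(respond(line))
```

Anything the rules do not anticipate falls through to the same fallback reply, which is why systems of this kind felt formulaic and were limited in scope.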

Recently, generative AI has seen substantial breakthroughs in both capabilities and adoption. Rather than being told exactly what to do in every situation, modern AI systems use deep learning to learn patterns from vast amounts of data. Deep learning uses massive artificial neural networks that can recognize complex patterns and generate human-like text, images, and other content by modeling and reproducing the statistical relationships in their training data.

The release of OpenAI’s ChatGPT on November 30, 2022 marked a turning point by bringing generative AI into the mainstream. While ChatGPT was not the first instance of a generative AI model available to the public, it caused a worldwide stir as evidenced by this chart of relative Google search interest in the term, which includes an annotation that indicates the week of ChatGPT’s launch:

ChatGPT’s ability to generate human-like responses to user inputs, called prompts, drove adoption at a rate never seen before. It reached the 100 million user mark in January 2023, just two months after it launched.

Following ChatGPT’s success, traditional tech giants like Microsoft, Google, and Meta launched their own generative AI models, incorporating them into various platforms. Relatively young start-ups such as Anthropic and Mistral AI also emerged and established a foothold with their own foundational models, Claude and Mixtral, respectively.

The Building Blocks of Generative AI

From Algorithms to Applications

In the world of generative AI, four key concepts are essential to understand: algorithms, architectures, foundational models, and applications. Let’s explore each one and how they relate to each other:

- Algorithm: The specific set of rules or steps that a generative AI uses to perform a task, like generating text or images. Think of it as the “recipe” for solving a particular problem.

- Architecture: The overall design and structure of a generative AI system. It defines how different components work together. If an algorithm is a recipe, the architecture is the kitchen layout and the arrangement of appliances.

- Foundational Model: A large-scale, pre-trained AI system capable of generating various types of content. These models, like GPT for text or DALL·E for images, are built using specific architectures and incorporate multiple algorithms. They serve as versatile starting points for more specialized tasks.

- Application: The practical, user-facing implementation of generative AI. Applications are built on top of foundational models or custom-designed systems, and they solve specific real-world problems or provide particular services.

How They Relate:

- Algorithms are the building blocks of architectures.

- Architectures provide the blueprint for foundational models.

- Foundational models are trained on vast datasets and can be used to create various applications.

- Applications often use foundational models, fine-tuning them for specific tasks or combining them with custom algorithms.

For a real-world example, OpenAI’s ChatGPT is an application that uses the GPT-4 foundational model, which is built using the transformer architecture that leverages the self-attention algorithm to learn how words in a body of text relate to each other.
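As a rough illustration of how self-attention relates words to each other, the sketch below implements a simplified scaled dot-product self-attention in plain Python. Several things here are assumptions made for brevity: the two-dimensional word vectors are invented, and the queries, keys, and values are simply the input vectors themselves, whereas real transformers first apply learned projection matrices and use many attention heads. It is not the implementation used by GPT-4 or any other production model.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def self_attention(vectors: list[list[float]]):
    """Simplified scaled dot-product self-attention.

    Assumption: queries, keys, and values are all the raw input vectors.
    Returns (attention weights, output vectors), one row per input word.
    """
    dim = len(vectors[0])
    all_weights, outputs = [], []
    for query in vectors:
        # Score this word against every word, scaled by sqrt(dimension).
        scores = [dot(query, key) / math.sqrt(dim) for key in vectors]
        weights = softmax(scores)
        # Output is the attention-weighted average of all word vectors.
        mixed = [sum(w * v[i] for w, v in zip(weights, vectors))
                 for i in range(dim)]
        all_weights.append(weights)
        outputs.append(mixed)
    return all_weights, outputs


# Made-up 2-dimensional "embeddings" for three words.
WORDS = ["river", "bank", "money"]
EMBEDDINGS = [[1.0, 0.0], [0.6, 0.8], [0.0, 1.0]]

if __name__ == "__main__":
    weights, _ = self_attention(EMBEDDINGS)
    for word, row in zip(WORDS, weights):
        print(word, [round(w, 2) for w in row])
```

Each row of weights shows how strongly one word attends to every word in the sequence, including itself. Words with more similar vectors receive higher weights, which is the sense in which the mechanism captures how words relate.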

Understanding these concepts and their relationships is crucial for effectively harnessing the power of generative AI. As we progress through this course, we’ll explore each of these elements in more depth, learning how they contribute to the creation of powerful generative AI systems.

Forms of Generative AI

1. Text Generation

Many generative AI models and applications, such as OpenAI’s ChatGPT and Anthropic’s Claude, can create text-based responses in human language based on a variety of different input types:

1a. Text-to-Text Models

A model that turns a text prompt into a text response is called a text-to-text model. Applications include chatbots, document summarization, human language translation, and even computer code writing. OpenAI’s GPT family of large language models, including GPT-3 and GPT-4, is a prime example, as is Anthropic’s Claude.

In the image below you’ll see Anthropic’s Claude 3.5 Sonnet’s response to a prompt asking for a clear and concise definition of generative AI. Here is the definition that Claude provided:

“Generative AI refers to artificial intelligence systems that can create new content, such as text, images, audio, or video, based on learned patterns and rules from existing data. These AI models use deep learning algorithms to analyze and understand the underlying structure and characteristics of the training data, allowing them to generate novel and coherent content that resembles the input data.”

1b. Image-to-Text Models

Some generative AI models can generate text descriptions based on image inputs. A model that turns an image-based prompt into a text-based response is called an image-to-text model. AI systems that can produce new text content by analyzing and integrating information from both images and written language are called vision-language models (VLMs).

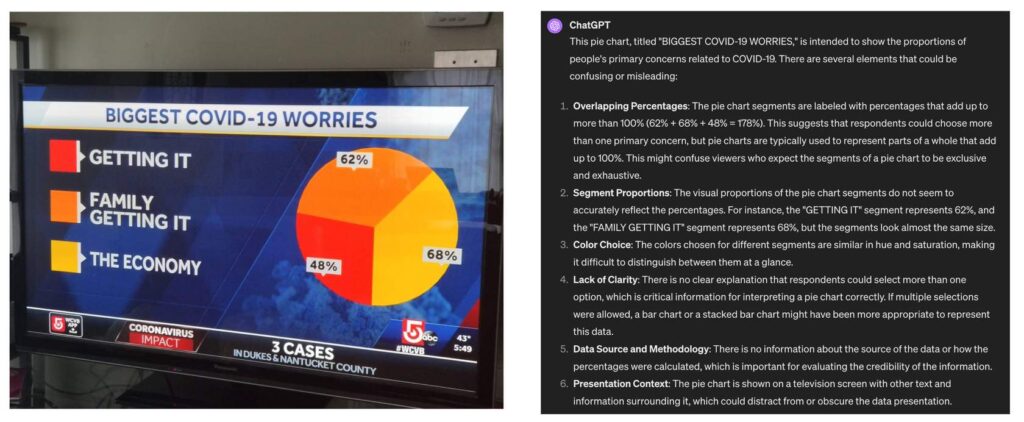

In the following example, OpenAI’s ChatGPT, using its computer vision capabilities, responded to a prompt that included an uploaded image of a problematic pie chart along with the text prompt, “What might be confusing or misleading about this chart?”

Here is the full response that ChatGPT provided. Note that it describes how the pie chart segments add up to more than 100 percent, and it provides a good explanation for why this happened and why it might be confusing to a viewer:

This pie chart, titled “BIGGEST COVID-19 WORRIES,” is intended to show the proportions of people’s primary concerns related to COVID-19. There are several elements that could be confusing or misleading:

- Overlapping Percentages: The pie chart segments are labeled with percentages that add up to more than 100% (62% + 68% + 48% = 178%). This suggests that respondents could choose more than one primary concern, but pie charts are typically used to represent parts of a whole that add up to 100%. This might confuse viewers who expect the segments of a pie chart to be exclusive and exhaustive.

- Segment Proportions: The visual proportions of the pie chart segments do not seem to accurately reflect the percentages. For instance, the “GETTING IT” segment represents 62%, and the “FAMILY GETTING IT” segment represents 68%, but the segments look almost the same size.

- Color Choice: The colors chosen for different segments are similar in hue and saturation, making it difficult to distinguish between them at a glance.

- Lack of Clarity: There is no clear explanation that respondents could select more than one option, which is critical information for interpreting a pie chart correctly. If multiple selections were allowed, a bar chart or a stacked bar chart might have been more appropriate to represent this data.

- Data Source and Methodology: There is no information about the source of the data or how the percentages were calculated, which is important for evaluating the credibility of the information.

- Presentation Context: The pie chart is shown on a television screen with other text and information surrounding it, which could distract from or obscure the data presentation.

In summary, the use of a pie chart in this context is not appropriate since the data categories are not mutually exclusive and exhaustive. Additionally, the visual representation of the data does not accurately reflect the percentages, leading to potential misinterpretation of the information presented.

1c. Speech-to-Text Models

Other generative AI models, such as OpenAI’s Whisper and Amazon Transcribe, have been trained on hundreds of thousands of hours of audio and corresponding transcripts in various languages so that they can turn speech into text.

Applications built with these models are also known as automatic speech recognition (ASR) systems, and they can be used to extract spoken language and key insights from video files, business meetings, and customer phone calls. These speech-to-text models can also be used for surveillance, presenting a dual-use concern.

2. Image Creation

Generative AI models such as Stable Diffusion, Midjourney, and OpenAI’s DALL·E can create or manipulate images based on different types of input prompts:

2a. Text-to-Image Models

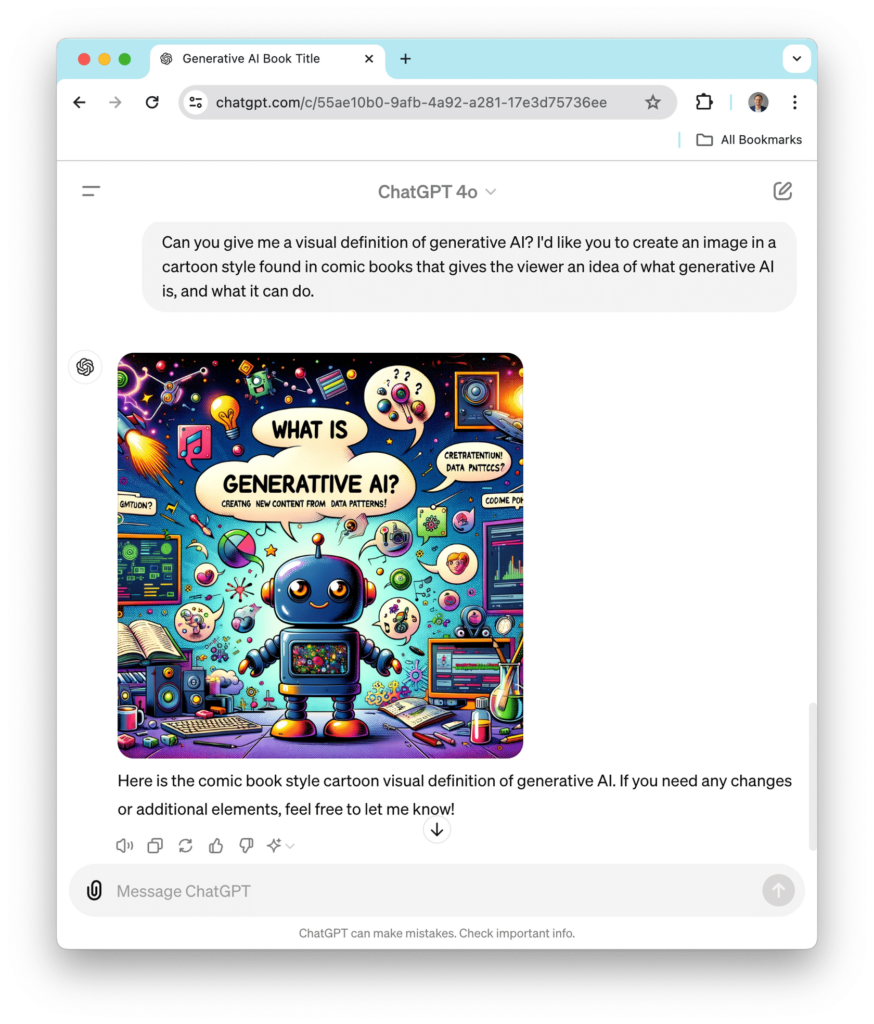

Text-to-image models generate images from text prompts, offering valuable tools for designers and artists. However, these tools raise significant ethical and legal concerns regarding intellectual property and creativity. Additionally, generated images often exhibit imperfections and occasionally contain bizarre flaws.

For example, the image below shows DALL·E’s response to a prompt asking for a visual definition of generative AI in a comic-book cartoon style. You’ll notice the cute humanoid robot surrounded by icons and symbols, and the text with misspellings and nonsensical words.

2b. Image-to-Image Models

Image-to-image models are generative AI models that can transform or manipulate existing images. For example, in the image below, OpenAI’s DALL·E (through the ChatGPT platform) turns a photograph of Seattle’s cityscape during the daytime into a nighttime scene complete with neon lights:

3. Music and Audio Composition

Music and audio production models are trained to create and manipulate sound by analyzing vast amounts of audio data. We’ll divide this section into text-to-audio models and text-to-speech models. Technically, text-to-audio is the broader term: it encompasses text-to-speech but can also involve generating other types of audio, such as background sounds, music, or sound effects.

3a. Text-to-Audio Models

Generative AI music creation programs such as Suno AI (or simply Suno) are trained to identify patterns and structures in music, such as rhythm, melody, and harmony, allowing them to generate new compositions or enhance existing audio tracks. When these systems create audio files based on text prompts, they’re using the capabilities of a text-to-audio model.

For example, the intro jingle to the instructional videos in this course was created by Suno in response to the prompt, “Create an instrumental video intro track that will be used for a course titled ‘Harnessing Generative AI.’”

Click the play button below to hear the AI-generated clip:

Taking it a step further, Suno created a full-length song, complete with lyrics and a vocal track, in response to the prompt, “Create a 70s style folk song about Generative AI.”

It titled the 3-minute, 11-second song “Electric Oracle,” and it generated album cover artwork to go along with it:

3b. Text-to-Speech Models

Text-to-speech models are a special case of text-to-audio models that focus on converting written text into spoken words. They primarily aim to produce natural-sounding human speech, and are typically used for voice assistants, screen readers, and audiobook narration.

For example, the audio file below was created by pasting Claude’s definition of “generative AI” into the PlayHT platform. Hit the play button below to hear an AI voice reading the definition.

4. Video Production

Video production models analyze extensive datasets of videos to learn the patterns of visual storytelling, including scene transitions, camera angles, and pacing. They generate new video sequences or edit existing footage, applying learned concepts to create cohesive and visually appealing content.

For example, the AI platform Runway produces sophisticated video content using AI-generated avatars and realistic voice synthesis. The video below was created in Runway, which combined an audio file of an AI voice reading the definition of GenAI with one of its stock avatars.

OpenAI’s video generation model is called Sora. A version of Sora called Sora Turbo was released as a standalone product, and made available to ChatGPT Plus and ChatGPT Pro users in December 2024. At the time of launch, it allowed users to generate videos up to 20 seconds long, in resolution as high as 1080p, and in various aspect ratios. It also features a storyboard interface that allows users to edit the timeline, and mix in their own assets.

For example, the video below was created by Sora from this prompt: “A seagull flies past Seattle’s Space Needle, with a view of the downtown skyline as seen from a ferry on the Puget Sound.”

5. Multimodal AI

Increasingly, cutting-edge generative AI platforms such as OpenAI’s ChatGPT can be described as multimodal AI systems that combine more than one of the capabilities listed above, including processing, integration, and generation of multiple modalities of content.

Multimodal AI systems can process information from multiple sources simultaneously, including text, images, videos, audio, code, and sometimes even sensory inputs such as sight, sound, and touch. These models can combine different types of information, convert prompts into various outputs, and handle a wide range of input types.

For example, the following video was generated by OpenAI’s video generation model Sora via a multimodal prompt. The image that DALL·E created of the Seattle cityscape at night was uploaded along with the following text prompt: “Animate this image by adding shooting stars, a fireworks show, and a UFO”:

Impacts on Creativity & Innovation

Generative AI expands the boundaries of creativity by providing tools that can quickly generate content ideas and prototypes. Writers, artists, and musicians use GenAI to enhance their work by generating ideas or creating complete pieces. Design and marketing professionals use AI-generated images and videos to create engaging content for campaigns. While all of this helps creators experiment with new concepts and styles, it also raises important ethical concerns, such as:

- Intellectual Property: Determining who owns AI-generated content is complex. Is it the user who provided the prompts, the developer of the AI model, or the entity that owns the data the AI was trained on?

- Bias and Fairness: It’s crucial to ensure GenAI models do not propagate biases present in their training data. In 2018, Amazon scrapped an AI recruiting tool after discovering it favored male candidates for technical roles, reflecting gender biases present in the training data it was fed from past resumes.

- Authenticity: It can be difficult at times to differentiate between human-generated and AI-generated content. Take the image below for example. Can you tell which of the images was created by a human and which was generated by AI? Click anywhere on the accordion below to expand and reveal the answer.

Solution

The image on the right is a print of the original, human-generated art piece titled Kawasaki. The image on the left was generated by OpenAI’s ChatGPT-4o in response to a prompt asking for an image mimicking the original.

Find the original image here: https://www.loc.gov/resource/jpd.01294/?st=image

Key Terms & Definitions

AI Boom

The rapid expansion and advancement in the field of artificial intelligence, characterized by significant investments, breakthroughs in technology, and widespread adoption across various industries. The AI boom has led to the development of numerous AI applications, transforming sectors like healthcare, finance, and entertainment.

AI Chatbot

A software application that uses artificial intelligence to simulate conversation with human users, typically through text or voice interactions. AI chatbots are used in customer service, virtual assistants, and other applications where automated responses can assist users.

AI Model

A computational program or system designed to perform tasks that typically require human intelligence, such as recognizing patterns, learning from data, and making decisions.

AI Winter

A phase in a broader cycle characterized by decreasing funding, loss of interest, and disappointment in the field of AI, often due to overpromising and underdelivering on AI capabilities during “AI booms.”

Algorithm

The specific set of rules or steps that a generative AI uses to perform a task, like generating text or images. Think of it as the “recipe” for solving a particular problem.

Application

The practical, user-facing implementation of generative AI. Applications are built on top of foundational models or custom-designed systems, and they solve specific real-world problems or provide particular services.

Architecture

The overall design and structure of a generative AI system. It defines how different components work together. If an algorithm is a recipe, the architecture is the kitchen layout and the arrangement of appliances.

Deep Learning

A subset of machine learning involving massive artificial neural networks with many layers. It enables models to analyze large amounts of data to identify patterns and make decisions or generate content.

Foundational Model

A large-scale, pre-trained AI system capable of generating various types of content. These models, like GPT for text or DALL·E for images, are built using specific architectures and incorporate multiple algorithms. They serve as versatile starting points for more specialized tasks.

Generative AI (GenAI)

A type of artificial intelligence that can create new content, such as text, images, music, or video, based on the data it has been trained on. It uses deep learning algorithms to understand patterns in data and generate novel outputs that resemble human-created content.

Image-to-Image Model

A type of generative AI model that transforms an input image into a different output image, often by applying styles, generating super-resolutions, or altering specific features. Examples include models that convert sketches to realistic images or add color to black-and-white photos.

Image-to-Text Model

A type of AI model that generates textual descriptions based on input images. These models analyze the content of an image and produce coherent text that describes the objects, scenes, and actions depicted in the image. Applications include image captioning and visual question answering.

Intellectual Property

Legal rights that protect the creations of the mind, including inventions, literary and artistic works, designs, symbols, names, and images used in commerce. In the context of AI, intellectual property concerns arise around the ownership and usage rights of AI-generated content.

Multimodal AI

Generative AI systems that can process information from multiple sources simultaneously, including text, images, videos, audio, and code, and that can combine different types of information and convert prompts into various types of outputs.

Neural Networks

Computational models inspired by the human brain’s network of neurons. Neural networks consist of interconnected nodes (neurons) that process data in layers, allowing the model to learn and make predictions or decisions based on input data. They are foundational to many AI and deep learning applications.

OpenAI’s ChatGPT

A generative AI application developed by OpenAI, capable of producing human-like text based on user prompts. ChatGPT is built on OpenAI’s GPT (Generative Pre-trained Transformer) family of foundational models and has been widely adopted for applications in customer support, content creation, and interactive dialogue systems.

Prompts

The initial input or instruction given to a generative AI system to guide or trigger the creation of specific content. It can be in the form of text, images, or other data types, depending on the AI model’s capabilities.

Text-to-Audio Models

AI models that convert written text into audio, often producing music or realistic human-like speech. These models are used in applications like audiobooks, voiceovers, and virtual assistants, enhancing accessibility and user experience through natural-sounding audio output.

Text-to-Image Models

Generative AI models that create images based on textual descriptions provided as input. These models understand the text and generate corresponding visual representations, useful in fields like graphic design, marketing, and content creation. Examples include DALL·E and Midjourney.

Text-to-Speech Models

AI models that transform written text into spoken language, producing synthetic but natural-sounding speech. These models are commonly used in applications such as virtual assistants, accessibility tools for the visually impaired, and automated customer service systems.

Text-to-Text Models

AI models that take written text as input and generate written text as output. These models are commonly used in applications such as chatbots, document summarization, language translation, and code writing. Examples include OpenAI’s GPT family of large language models and Anthropic’s Claude.

Vision-Language Models (VLMs)

AI models that combine computer vision (CV) and natural language processing (NLP) to understand and generate text about images. VLMs are multimodal models that learn to associate information from image and text modalities, allowing them to understand images and text jointly and relate them to each other. They can perform tasks such as image captioning, visual question answering, and generating descriptive text for images.

Knowledge Check 1 of 3

Question

What kind of content do the following AI models generate (Text, Image, Music & Audio, or Video)?

- Stable Diffusion

- Runway

- OpenAI’s DALL·E

- Suno

- Claude 3 Opus

- PlayHT

- Midjourney

Answer

- Stable Diffusion: Image Creation

- Runway: Video Production

- OpenAI’s DALL·E: Image Creation

- Suno: Music & Audio Production

- Claude 3 Opus: Text Generation

- PlayHT: Music & Audio Production

- Midjourney: Image Creation

Knowledge Check 2 of 3

Question

How do the key concepts of generative AI—algorithm, architecture, foundational model, and application—interact to create a practical AI solution?

a. Algorithms are the large-scale, pre-trained systems capable of generating various types of content; architectures are the user-facing implementation; foundational models define the overall design and structure of the system; applications are the specific rules or steps that a generative AI uses to perform a task.

b. Algorithms define the overall design and structure of the system; architectures are the specific rules or steps that a generative AI uses to perform a task; foundational models are the user-facing implementation; applications are the large-scale, pre-trained systems capable of generating various types of content.

c. Algorithms are the specific rules or steps that a generative AI uses to perform a task; architectures define the overall design and structure of the system; foundational models are the large-scale, pre-trained systems capable of generating various types of content; applications are the user-facing implementation.

d. Algorithms are the user-facing implementation; architectures are the large-scale, pre-trained systems capable of generating various types of content; foundational models are the specific rules or steps that a generative AI uses to perform a task; applications define the overall design and structure of the system.

Answer

c. Algorithms are the specific rules or steps that a generative AI uses to perform a task; architectures define the overall design and structure of the system; foundational models are the large-scale, pre-trained systems capable of generating various types of content; applications are the user-facing implementation.

Knowledge Check 3 of 3

Question

Examine the image below. Can you tell if it’s human or AI-generated?

Answer

AI-generated. This image, titled “PSEUDOMNESIA: The Electrician,” was crafted by Berlin-based artist Boris Eldagsen using DALL·E 2. This image won the creative photo category of The Sony World Photography Awards. The artist ultimately turned down the award with the message: “I applied as a cheeky monkey, to find out if the [competitions] are prepared for AI images to enter. They are not.”

Exercise: Generative AI Exploration

Objective: To explore and test a wide range of generative AI capabilities across different modalities, understanding the potential and limitations of each.

Instructions: Use a multimodal AI application (or various AI tools) provided by your organization to complete the following tasks. Document your prompts, outputs, and observations for each interaction.

Text-to-Text Interactions

- Ask the AI to introduce itself and explain what it can do, and how it does it.

- Ask the AI to translate a simple sentence into three different languages of your choice.

- Prompt the AI to write a short story (100 words) about a situation of your choosing.

- Describe a hypothetical scenario at work and ask for advice on how to handle it.

Text-to-Image Interactions

- Generate an image of an object of your choosing (e.g., “red apple on a wooden table”).

- Create an image in the style of a famous artist (e.g., “A landscape in the style of Van Gogh”).

- Create the same basic scene (e.g., “A forest clearing”) multiple times, adjusting parameters like aspect ratio, style, or other available settings each time.

Image-to-Text Interactions

- Upload a photograph of your choosing and ask the AI to describe it in detail.

- Provide a screenshot of a graph or chart and ask the AI to interpret the data.

- Share an image of a complex machine or gadget and ask the AI to explain how it might work.

Image-to-Image Interactions

- Upload a photo of yourself or a landscape and ask the AI to transform it into a watercolor painting style.

- Upload a picture of a car and ask the AI to change its color and add some futuristic features.

- Share an image of a simple room interior and ask the AI to redecorate it in a specific style (e.g., Art Deco, Minimalist, Bohemian).

Text-to-Audio Interactions

- Create a text description of a specific scene (e.g., a serene nature scene, or exciting city nightlife) and ask the AI to generate corresponding ambient sounds.

- Write a short paragraph about your favorite hobby and convert it to speech using different accents or emotions.

- Ask it to write a song about a certain topic and in a specific genre of music (e.g., about a lost cat in the city, in the style of 80s pop music).

Video Production Interactions

- Text-to-Video: Provide a short script or description and ask the AI to generate a simple animated video (e.g., “A day in the life of a busy bee”).

- Video-to-Text: Upload a short video clip and ask the AI to: a) generate a detailed description of the video content; b) create captions or subtitles for the dialogue or actions.

- Video Editing: If available, use an AI tool to: a) automatically cut a longer video into highlights or a trailer; b) suggest background music that matches the mood of your video.

Further Learning

If you’d like to further your learning of the topics covered in this lesson, here are some resources for you to explore:

- Article: “Explained: Generative AI,” MIT News, Adam Zewe, November 9, 2023.

- Blog Post: “What is ChatGPT Doing…and Why Does It Work?” Stephen Wolfram, February 14, 2023.

- Book: “Brave New Words: How AI Will Revolutionize Education (and Why That’s a Good Thing)” by Salman Khan (2024).

- Book: “Co-Intelligence: Living and Working with AI” by Ethan Mollick (2024).

- Free eBook: “Generative AI: Perspectives from Stanford HAI,” March 2023.