Lesson 1: Bias and Fairness in AI

Lesson 1 Learning Objectives

By the end of this lesson, you will be able to:

- Understand the distinction between statistical bias and systemic bias.

- Identify sources of bias in AI systems and their potential impacts on individuals, communities, and society.

- Apply techniques to measure and mitigate unintended bias in datasets and algorithms.

- Evaluate AI systems for fairness across diverse populations.

Fairness in AI is about minimizing the degree to which our intelligent systems discriminate based on race, gender, age, or other protected attributes. Achieving fairness is an ongoing commitment. It must be integrated into every stage of the AI development lifecycle, from assembling diverse teams to setting ethical guidelines and continuously monitoring systems to ensure fairness.

Forms of Bias

Artificial intelligence systems have the potential to transform industries and improve decision-making processes, but they also carry the risk of perpetuating and even exacerbating bias. AI bias can emerge from various sources, each contributing to unfair or harmful outcomes if left unchecked. Understanding these different forms of bias is critical for building AI systems that are ethical and equitable.

- Societal bias stems from the systemic and pervasive attitudes, stereotypes, and assumptions that exist within our societies. These biases can unintentionally seep into the AI development process, reflecting historical power dynamics and inequalities. As a result, AI systems can perpetuate and even amplify these societal biases if not carefully managed.

- Data bias occurs when the data used to train AI models does not accurately and fairly represent the population it is meant to serve. This can happen if the training data is skewed towards certain groups, leading to biased outcomes. To mitigate data bias, it is essential to ensure that the training data is diverse and representative of the entire population. This may involve collecting additional data from underrepresented groups to fill gaps and create a more balanced dataset.

- Algorithmic bias arises when the design of the AI model itself leads to unfair outcomes. This can be due to the choice of features, the model architecture, or the optimization objectives. Addressing algorithmic bias requires careful consideration of the model design and the potential impact of different features and objectives. Techniques such as fairness-aware machine learning can help reduce algorithmic bias by incorporating fairness constraints directly into the model training process.

- Statistical bias occurs when a systematic error in a model causes it to produce an inaccurate result. Statistical bias is a purely technical concept and not inherently related to social or ethical concerns.
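For reference, the statistical sense of bias is usually written as a formula. The symbols here are introduced only for illustration and are not defined elsewhere in this lesson: $\hat{\theta}$ is an estimate produced by a model or procedure, and $\theta$ is the true quantity it is trying to estimate.

$$
\operatorname{Bias}(\hat{\theta}) = \mathbb{E}[\hat{\theta}] - \theta
$$

An estimator is called unbiased when this difference is zero. Nothing in the formula refers to people or groups, which is why statistical bias on its own is a technical rather than an ethical notion.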

These forms of bias (societal, data, and algorithmic) are often deeply interconnected, making it difficult to clearly separate them. For example, algorithmic bias is often a consequence of societal bias, with flawed algorithms reinforcing the very inequalities present in the world. A clear example is the 2018 Gender Shades study by Joy Buolamwini of the MIT Media Lab and Timnit Gebru, which uncovered significant bias in commercial facial analysis systems from three major tech companies: Microsoft, IBM, and Megvii of China. The study found large disparities in gender classification accuracy, with error rates under 1% for lighter-skinned men but as high as 35% for darker-skinned women. While these inaccuracies surface as algorithmic bias, they are also a product of data bias and societal bias: the systems were trained on datasets that underrepresented women and people of color, particularly those with darker skin tones, reflecting historical inequalities in data collection and representation.

Metrics for Measuring Fairness in AI

Determining fairness in AI requires evaluating a range of metrics, each offering a different lens for understanding how a system treats individuals and groups. No single metric can capture every aspect of fairness, so choosing the right one depends on the specific goals and context of the AI application. Often, a combination of metrics is used to provide a well-rounded view, helping to uncover potential trade-offs and conflicts between fairness and performance.

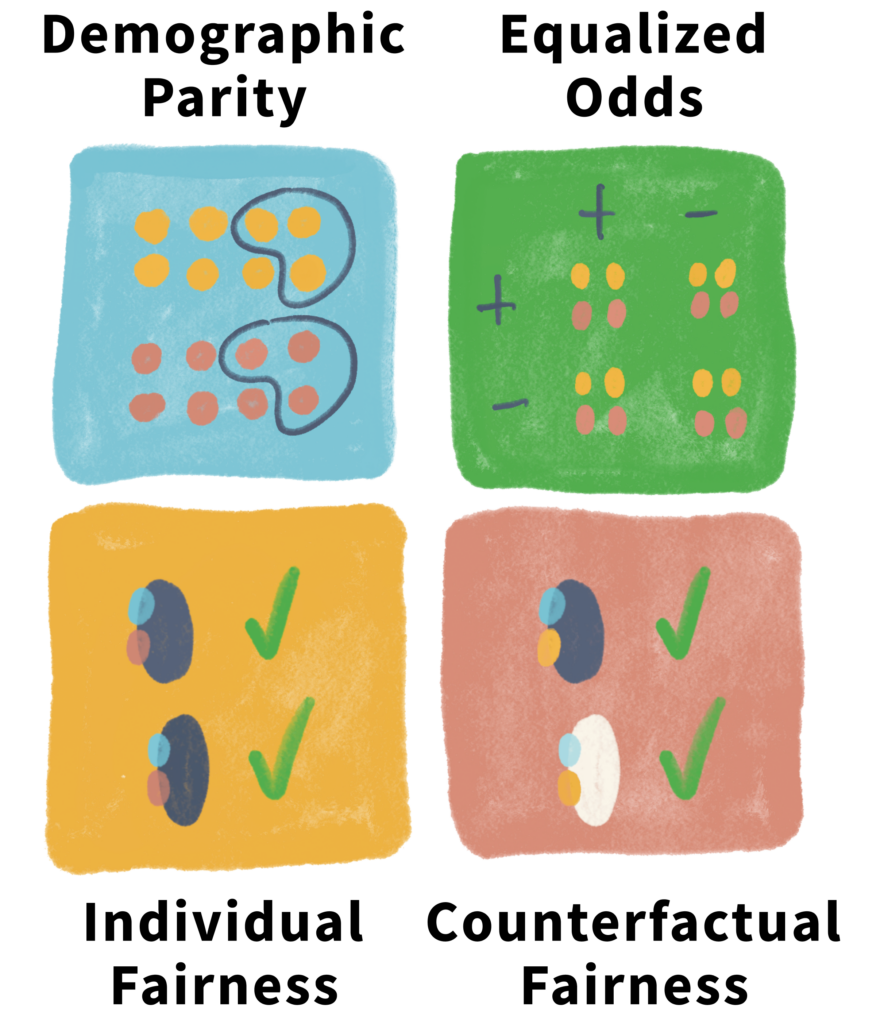

The four key metrics we’ll cover are demographic parity, equalized odds, individual fairness, and counterfactual fairness.

Demographic parity assesses whether a model’s predictions are distributed equally across different demographic groups, regardless of protected attributes such as gender, race, or age. In essence, it ensures that a similar proportion of positive outcomes (such as being hired or approved for a loan) is assigned to all groups.

While the concept is relatively simple and easy to implement, it can lead to unintended consequences. One potential issue arises when there are genuine, non-discriminatory differences between groups that affect the outcome of interest. For instance, if certain groups have different levels of relevant qualifications or prior experience, forcing demographic parity might result in qualified individuals from one group being overlooked, or less qualified individuals from another group being favored. This could, in turn, lead to perceived or actual unfairness, as demographic parity focuses solely on outcomes rather than underlying fairness in how decisions are made.

In such cases, applying demographic parity without considering these contextual factors can obscure deeper issues and potentially harm the individuals it seeks to protect. Therefore, it’s essential to weigh the benefits and limitations of demographic parity against other fairness metrics that consider individual qualifications and differences across groups.
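To make the definition concrete, here is a minimal sketch of how demographic parity could be measured. The function names, the group labels "A" and "B", and the loan decisions are all hypothetical and chosen only for illustration.

```python
from collections import defaultdict

def selection_rates(predictions, groups):
    """Return the share of positive predictions (1s) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}

def demographic_parity_difference(predictions, groups):
    """Largest gap in selection rate between any two groups (0 means parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())

# Hypothetical loan decisions: 1 = approved, 0 = denied.
predictions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = selection_rates(predictions, groups)
gap = demographic_parity_difference(predictions, groups)
print(rates)  # {'A': 0.75, 'B': 0.25}
print(gap)    # 0.5
```

A gap of 0 would mean both groups are approved at the same rate. Note that the calculation never looks at qualifications, which is exactly the limitation described above.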

Equalized odds requires that a model’s true positive rate and false positive rate are equal across protected groups, such as race or gender. Because the false negative rate is simply one minus the true positive rate, equal true positive rates imply equal false negative rates as well. For example, in a recidivism prediction model, equalized odds would require that the rates of correctly identifying reoffenders (true positives), incorrectly flagging non-reoffenders (false positives), and failing to identify actual reoffenders (false negatives) are the same for all racial groups.

This metric helps prevent systematic bias by ensuring that prediction accuracy and error rates are consistent across groups. However, achieving equalized odds is challenging due to trade-offs between fairness and accuracy, especially when groups have different base rates for the outcome. Additionally, it may conflict with other fairness goals and requires complex adjustments to the model, making it difficult to implement in practice.
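The check itself is simple to sketch, even if satisfying it is hard. The example below computes the true positive rate and false positive rate per group. The function name and the data are hypothetical, and the sketch assumes every group contains at least one actual positive and one actual negative (otherwise a rate would be undefined).

```python
def group_error_rates(y_true, y_pred, groups):
    """Return {group: (true_positive_rate, false_positive_rate)}."""
    counts = {}
    for t, p, g in zip(y_true, y_pred, groups):
        c = counts.setdefault(g, {"tp": 0, "fn": 0, "fp": 0, "tn": 0})
        if t == 1:
            c["tp" if p == 1 else "fn"] += 1
        else:
            c["fp" if p == 1 else "tn"] += 1
    rates = {}
    for g, c in counts.items():
        tpr = c["tp"] / (c["tp"] + c["fn"])  # share of actual positives caught
        fpr = c["fp"] / (c["fp"] + c["tn"])  # share of actual negatives flagged
        rates[g] = (tpr, fpr)
    return rates

# Hypothetical outcomes (1 = reoffended) and predictions (1 = flagged).
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = group_error_rates(y_true, y_pred, groups)
print(rates)  # {'A': (1.0, 0.5), 'B': (0.5, 0.0)}
```

In this made-up data, group A is flagged incorrectly more often (higher false positive rate) while group B's actual positives are missed more often (lower true positive rate), so equalized odds is violated on both counts.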

Individual fairness focuses on ensuring that individuals who are similar in relevant ways receive similar outcomes or treatment from a model. The core idea is that if two people are alike in terms of the characteristics that are important for a decision (e.g., qualifications for a job or creditworthiness), they should receive the same or very similar predictions or results from the model.

To apply individual fairness, it’s crucial to define what “similar” means in the specific context of the model. This involves identifying the features or attributes that are most relevant to the decision-making process. For example, in a loan approval model, relevant similarities might include income, credit score, and employment history. The challenge lies in determining which features are truly important for fairness and ensuring that they are measured accurately and without bias.

Once relevant similarities are defined, the model must be designed to treat individuals who are similar in those respects in a consistent manner. This often requires sophisticated algorithms to ensure that differences in characteristics that are irrelevant to the decision (like race or gender) don’t lead to vastly different outcomes.

However, implementing individual fairness is difficult because it relies on correctly identifying and quantifying the important features for each specific case. Furthermore, it assumes that the model has access to all the necessary information to make these comparisons fairly. In complex, real-world situations, this can be hard to achieve, making individual fairness a theoretically sound but practically challenging metric to implement.
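One common way to formalize "similar individuals, similar outcomes" is to require that the gap between two people's scores be no larger than some constant times the distance between them. The sketch below flags pairs that break this rule. The distance function, the constant `lipschitz`, the feature scaling, and the applicant data are all assumptions made for illustration; choosing a defensible distance is the hard part in practice.

```python
from itertools import combinations

def distance(a, b):
    """Hypothetical similarity metric: mean absolute difference of
    features that have already been scaled to the range [0, 1]."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)

def fairness_violations(features, scores, lipschitz=1.0):
    """Return index pairs whose score gap exceeds lipschitz * distance."""
    violations = []
    for i, j in combinations(range(len(features)), 2):
        allowed_gap = lipschitz * distance(features[i], features[j])
        if abs(scores[i] - scores[j]) > allowed_gap:
            violations.append((i, j))
    return violations

# Scaled (income, credit score, employment history) for three applicants.
applicants = [
    (0.80, 0.90, 0.70),
    (0.80, 0.90, 0.75),  # nearly identical to applicant 0
    (0.20, 0.30, 0.10),
]
scores = [0.90, 0.40, 0.35]  # hypothetical model outputs

violations = fairness_violations(applicants, scores)
print(violations)  # [(0, 1)]
```

Applicants 0 and 1 are almost indistinguishable on the relevant features yet receive very different scores, so that pair is flagged.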

Counterfactual fairness is a fairness criterion that requires a model’s predictions to remain unchanged if a protected attribute, such as race or gender, is hypothetically altered while keeping all other relevant attributes the same. The idea behind counterfactual fairness is that if a person’s race or gender were different, but everything else about them—such as their qualifications, experience, or income—remained the same, the model should still make the same prediction for them. This ensures that the decision isn’t influenced by the protected attribute, but by legitimate factors related to the outcome.

Achieving counterfactual fairness involves creating a causal model that can simulate how changing the protected attribute would affect the prediction while holding other factors constant. This requires a deep understanding of the relationships between variables in the data and how they are influenced by protected attributes. For instance, in a hiring model, the test is whether the prediction stays the same when gender is altered while experience and education are held fixed.

However, implementing counterfactual fairness is computationally demanding because it requires building these causal models and running simulations to test whether predictions remain consistent when a protected attribute is altered. Additionally, accurately modeling the causal relationships between variables and determining which attributes are truly independent of the protected characteristic can be complex. This makes counterfactual fairness a powerful but resource-intensive approach that may be difficult to implement in practice, especially in large or highly complex datasets.
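A much simpler check, sometimes used as a first pass, is to swap only the protected attribute and see whether the prediction changes. This is a deliberate simplification: it does not build the causal model described above, so it cannot detect bias that flows through other attributes influenced by the protected one. Both scoring rules and the applicant records below are hypothetical.

```python
def biased_model(applicant):
    """Hypothetical scoring rule that (wrongly) uses gender directly."""
    score = 0.1 * applicant["years_experience"]
    if applicant["gender"] == "male":
        score += 0.2
    return score >= 0.5

def fair_model(applicant):
    """Hypothetical scoring rule that ignores gender."""
    return 0.1 * applicant["years_experience"] >= 0.5

def flip_test(model, applicants, attribute, values):
    """Return applicants whose prediction changes when `attribute` is swapped."""
    changed = []
    for applicant in applicants:
        outcomes = {model({**applicant, attribute: v}) for v in values}
        if len(outcomes) > 1:
            changed.append(applicant)
    return changed

applicants = [
    {"years_experience": 4, "gender": "female"},
    {"years_experience": 8, "gender": "male"},
]
genders = ["female", "male"]

print(flip_test(biased_model, applicants, "gender", genders))
# [{'years_experience': 4, 'gender': 'female'}]
print(flip_test(fair_model, applicants, "gender", genders))
# []
```

Passing this test is necessary but not sufficient for counterfactual fairness, because a model can ignore the protected attribute itself and still rely on proxies for it.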

Techniques to Mitigate Bias in AI

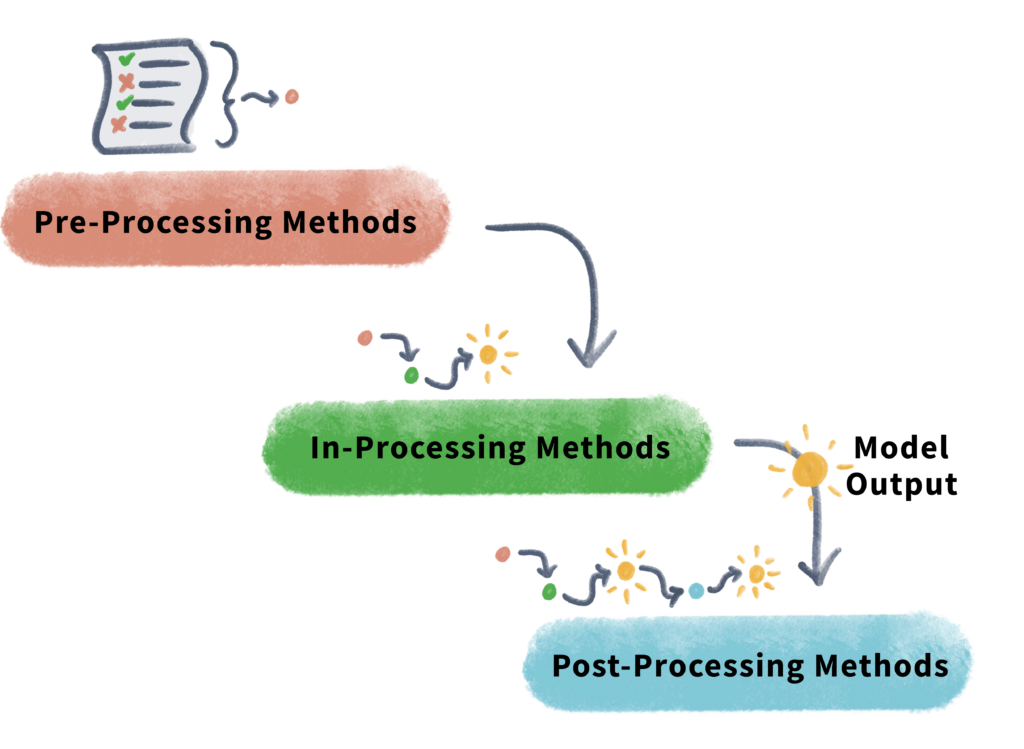

Bias in AI systems can stem from various sources, such as societal norms, data imbalances, and algorithmic design. To mitigate these biases, different techniques can be applied at various stages in the AI development process. These approaches are categorized into pre-processing, in-processing, and post-processing methods, each addressing bias at specific stages of the AI pipeline.

Pre-processing techniques focus on the data used to train AI models. These methods modify or transform the data before model training to reduce bias. By addressing biases in the data, the model has a better chance of producing fair outcomes. Below are some commonly used pre-processing techniques:

- Re-weighting: This technique involves adjusting the importance of certain data points in the training dataset. For example, if a particular demographic group is underrepresented, their data points can be given more weight during training. This ensures that the model treats them with equal importance, helping to balance outcomes across different groups.

- Resampling or Synthetic Data Generation (e.g., SMOTE): SMOTE (Synthetic Minority Over-sampling Technique) is used to generate synthetic data for underrepresented groups. Instead of simply duplicating existing data points, SMOTE creates new, plausible instances by interpolating between minority class samples. This improves the model’s exposure to minority groups, reducing bias against them and enhancing generalization to real-world, diverse populations.
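Both pre-processing ideas can be sketched in a few lines. The first function assigns inverse-frequency weights so that each group carries the same total weight. The second creates synthetic points by interpolation; it is a simplification of SMOTE, which interpolates toward one of a sample's nearest neighbors rather than toward a random partner. All names and data here are hypothetical.

```python
import random
from collections import Counter

def group_weights(groups):
    """Weight each group so that all groups carry equal total weight."""
    counts = Counter(groups)
    n_samples, n_groups = len(groups), len(counts)
    return {g: n_samples / (n_groups * c) for g, c in counts.items()}

def interpolate_samples(minority, n_new, seed=0):
    """Create synthetic points on the segment between two minority samples.

    Simplification: real SMOTE picks the partner among the k nearest
    neighbors; here the partner is any other minority sample.
    """
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        a, b = rng.sample(minority, 2)
        lam = rng.random()  # position along the segment, in [0, 1)
        synthetic.append(tuple(x + lam * (y - x) for x, y in zip(a, b)))
    return synthetic

# Re-weighting: group B is underrepresented (2 of 8 rows).
groups = ["A"] * 6 + ["B"] * 2
weights = group_weights(groups)
sample_weights = [weights[g] for g in groups]
print(weights)  # A is weighted about 0.67, B is weighted 2.0

# Synthetic generation: three hypothetical minority-group feature rows.
minority = [(1.0, 2.0), (2.0, 4.0), (3.0, 3.0)]
synthetic = interpolate_samples(minority, n_new=4)
print(synthetic)
```

The per-row `sample_weights` list is the kind of input many training routines accept as a sample-weight argument. The synthetic rows always fall between existing minority rows, so they add density but no genuinely new information about the group.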

In-processing methods work during the model training phase, incorporating fairness constraints to adjust the model as it learns. These techniques aim to mitigate bias by ensuring the model is learning fair representations and making decisions based on unbiased patterns.

- Adversarial Debiasing: This technique involves training the model with two objectives simultaneously. The first objective is to perform the primary task (such as classifying candidates in hiring). The second is an adversarial task, where another model is trained to predict sensitive attributes (e.g., race, gender). The goal is to make the adversarial model perform poorly at predicting these attributes. By doing so, the main model is encouraged to make decisions that are less influenced by these protected attributes, leading to fairer outcomes.
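The two-objective setup can be sketched with two tiny logistic models trained by hand. This is a toy illustration under several assumptions, not a production method: the adversary sees only the predictor's raw score, the strength parameter `lam` and the learning rate are arbitrary, and the data is invented. Published variants add further stabilizing terms that are omitted here.

```python
import math
import random

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def train(X, y, a, lam=1.0, lr=0.05, epochs=100, seed=0):
    """Train a logistic predictor against a logistic adversary.

    X: feature rows, y: task labels (0/1), a: protected attribute (0/1).
    lam: how strongly the predictor is pushed to fool the adversary
         (lam=0 gives ordinary logistic regression).
    Returns (predictor weights, predictor bias).
    """
    rng = random.Random(seed)
    w = [0.0] * len(X[0])
    b = 0.0
    u, c = 0.0, 0.0  # adversary: predicts `a` from the predictor's score z
    order = list(range(len(X)))
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            z = sum(wj * xj for wj, xj in zip(w, X[i])) + b
            p = sigmoid(z)          # predictor's estimate of y
            q = sigmoid(u * z + c)  # adversary's estimate of a
            # Gradient of (task loss - lam * adversary loss) with respect to z.
            dz = (p - y[i]) - lam * (q - a[i]) * u
            # Adversary takes a step to get better at predicting a.
            u -= lr * (q - a[i]) * z
            c -= lr * (q - a[i])
            # Predictor lowers the task loss while raising the adversary's loss.
            w = [wj - lr * dz * xj for wj, xj in zip(w, X[i])]
            b -= lr * dz
    return w, b

# Hypothetical data: column 0 is a skill score, column 1 is a proxy
# that happens to equal the protected attribute.
X = [[1.0, 1.0], [0.8, 1.0], [-0.7, 1.0], [0.9, 0.0],
     [-1.0, 0.0], [-0.6, 0.0], [0.5, 1.0], [-0.9, 0.0]]
y = [1, 1, 0, 1, 0, 0, 1, 0]
a = [1, 1, 1, 0, 0, 0, 1, 0]

w_plain, b_plain = train(X, y, a, lam=0.0)
w_debiased, b_debiased = train(X, y, a, lam=1.0)
print("weights without adversary:", w_plain)
print("weights with adversary:   ", w_debiased)
```

A natural way to use this would be to compare the weight placed on the proxy column in the two runs. No particular outcome is claimed here, since adversarial training can oscillate and its results depend heavily on `lam`, the learning rate, and the data.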

Post-processing techniques are applied after a model has been trained. These methods adjust the outputs or decisions of the model to ensure fairness, without changing the model itself. This is often useful when retraining the model isn’t feasible or when the bias is detected after deployment.

- Calibration: Calibration adjusts the model’s prediction probabilities to account for bias. For instance, if a model is systematically overestimating the likelihood of success for one group and underestimating it for another, calibration can adjust these probabilities to make them more accurate and fair across groups. This method helps align the predictions with real-world expectations, improving fairness in decisions like hiring or lending.

- Relabeling: Relabeling involves adjusting the labels assigned by the model based on fairness considerations. If a model’s predictions are skewed for certain groups, this method can relabel outcomes, ensuring a fairer distribution of positive and negative decisions across demographic categories. It’s a straightforward technique often used when there are clear discrepancies in the model’s performance across groups.
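As a rough illustration of post-processing, the sketch below takes scores from an already trained model and reassigns the final labels so that each group receives the same share of positive decisions, while keeping the model's ranking within each group. The function name, the target rate, and the scores are hypothetical, and this is only one of several ways such an adjustment could be defined.

```python
def relabel_to_target_rate(scores, groups, target_rate):
    """Give each group the same share of positive labels,
    keeping the within-group ranking by score."""
    labels = [0] * len(scores)
    by_group = {}
    for idx, g in enumerate(groups):
        by_group.setdefault(g, []).append(idx)
    for idxs in by_group.values():
        n_pos = round(target_rate * len(idxs))
        ranked = sorted(idxs, key=lambda i: scores[i], reverse=True)
        for i in ranked[:n_pos]:
            labels[i] = 1
    return labels

# Hypothetical scores from a trained model.
scores = [0.9, 0.8, 0.6, 0.3, 0.55, 0.45, 0.4, 0.2]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

# One shared threshold approves 3 of 4 in group A but only 1 of 4 in group B.
threshold_labels = [int(s >= 0.5) for s in scores]
print(threshold_labels)  # [1, 1, 1, 0, 1, 0, 0, 0]

# Relabeling to a 50% positive rate in every group.
fair_labels = relabel_to_target_rate(scores, groups, target_rate=0.5)
print(fair_labels)       # [1, 1, 0, 0, 1, 1, 0, 0]
```

The model itself is untouched; only the mapping from scores to decisions changes. The cost is visible in the example: one applicant from group A with a score of 0.6 is no longer approved, while one from group B with a score of 0.45 now is.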

Case Studies

One effective way to understand bias and fairness in AI is to examine real-world examples. These case studies reveal the significant impact of biased AI systems on individuals and society, as well as the efforts organizations have made to correct these issues.

We’ll explore key examples where AI systems succeeded or failed in ensuring fairness, highlighting important lessons learned and strategies for mitigating bias.

COMPAS and Bias in AI-Driven Decision-Making

In the U.S. criminal justice system, the COMPAS (Correctional Offender Management Profiling for Alternative Sanctions) tool is used to assess the likelihood that a defendant will reoffend. On the surface, this AI tool seems like a helpful resource for making more informed decisions. However, in 2016, an investigation by ProPublica reported a serious issue: COMPAS’s errors were skewed by race. Black defendants who did not go on to reoffend were nearly twice as likely as white defendants to have been labeled higher risk, while white defendants who did reoffend were more likely to have been labeled lower risk.

This disparity highlights a critical violation of the principle of Equalized Odds, which requires that error rates be consistent across different demographic groups. In this case, COMPAS displayed unequal error rates for Black and white defendants, leading to biased outcomes in criminal justice decisions. The investigation sparked a nationwide conversation about bias in AI-driven decision-making and raised concerns about the ethical use of such tools in high-stakes environments like the justice system.

This case serves as a powerful example of how AI systems, when not carefully designed or monitored, can perpetuate existing societal biases, resulting in unfair treatment for certain groups. It underscores the need for more rigorous fairness checks and bias mitigation strategies in AI development, particularly in areas where decisions have significant impacts on people’s lives.

Amazon’s AI Recruiting Tool and Gender Bias

In 2018, Amazon made headlines when Reuters reported that it had abandoned an AI-powered recruiting tool that was intended to streamline its hiring process. The reason? By 2015, the company had found that the system displayed a significant bias against female candidates. The AI had been trained on resumes submitted to Amazon over a decade, most of which came from men, a reflection of the male-dominated tech industry at the time. As a result, the AI system learned to favor male applicants, even penalizing resumes that contained the word “women’s,” such as “women’s chess club captain.”

This case is a stark reminder of how AI systems, when trained on biased historical data, can perpetuate and even amplify existing societal biases. Instead of promoting fairer, more efficient hiring, Amazon’s tool reinforced gender imbalances in the tech sector. This example underscores the critical importance of ensuring that AI systems are trained on balanced, representative data and are regularly audited for fairness to prevent unintended bias from influencing important decisions, like hiring.

Bias in AI Image Generation Models

Addressing bias in AI systems can be challenging and nuanced, as illustrated by the early iterations of image generation models like DALL-E and Midjourney. These models faced criticism for producing stereotypical results when given neutral prompts. For example, when asked to generate an image of a doctor, the models predominantly produced images of men, while prompts for a nurse typically resulted in images of women. This highlighted the inherent biases the models had learned from their training data.

In an effort to correct this, Google’s Gemini AI took a different approach by generating more diverse images in response to prompts. However, this attempt raised another issue: in some cases, the generated images were seen as overly diverse, sparking debates about historical accuracy and realism. For instance, images meant to depict specific historical contexts featured a diversity that did not align with the actual demographics of the time. This led to questions about how to balance diversity with representation, especially when realism is expected. The controversy prompted Google to take the image generation feature offline, eventually replacing it with a new model six months later.

These incidents highlight the complexity of addressing bias in AI systems. They demonstrate that both under-representation and over-representation of diversity can cause problems, particularly in cases where historical accuracy or realism is anticipated. Finding the right balance is crucial to creating AI systems that are both fair and contextually appropriate.

Gender Bias in Google Job Ad Algorithms

Researchers from Carnegie Mellon University discovered significant gender bias in Google’s ad delivery algorithm. Their study used a tool called AdFisher to simulate job seekers whose online behavior was identical except for their listed gender. The findings revealed a stark contrast in how often ads for high-paying executive jobs were shown: 1,852 times to male profiles versus only 318 times to female profiles.

This study underscores the challenge of algorithmic bias: even seemingly neutral algorithms can perpetuate societal biases, reflecting a cycle in which biased input data results in biased outputs. Google’s response highlighted advertiser control over ad targeting but did not fully address the systemic issues raised by the findings.

This case exemplifies the need for more transparent AI systems and robust fairness audits to prevent discriminatory practices in algorithm-driven platforms, emphasizing the importance of ethical AI development to ensure equity in professional opportunities.

Practical Steps for Implementing Fairness in AI Development

Ensuring fairness in AI development requires a comprehensive approach that addresses potential biases at every stage. The following practical steps provide a roadmap for creating fair and equitable AI systems. By following these guidelines, developers can build AI systems that serve all users fairly and responsibly.

- Diverse Data Collection: Ensure your training data represents the population your AI will serve. This may involve gathering more data from underrepresented groups to fill gaps.

- Bias Audits: Regularly review your data and models to catch bias issues before they escalate.

- Fairness-Aware Machine Learning: Incorporate fairness into your model from the start, using techniques like adversarial debiasing. This involves training one model for the main task while a second model tries to predict a protected attribute (e.g., race or gender) from the first model’s output. The primary model is trained so that the second model’s accuracy drops, which reduces the influence of the protected attribute on the primary model’s decisions.

- Transparent Documentation: Maintain clear records of data sources, model architecture, and decision-making processes. Transparency is key to identifying and addressing biases.

- Diverse Teams: Create diverse teams that reflect the population your AI serves. Varied perspectives help catch biases that a homogeneous group might miss.

- Continuous Monitoring: Regularly monitor AI systems in production to ensure fairness as they handle new data and make decisions.

- Ethical Guidelines: Establish and adhere to well-defined ethical guidelines for AI development within your organization. These guidelines must explicitly focus on fairness and non-discrimination.

- Stakeholder Engagement: Engage with the communities impacted by your AI system. Their feedback is crucial for identifying potential issues and ensuring that your system meets their needs equitably.

Knowledge Check

Question

Scenario: You are part of a team developing an AI model to assess loan applications. After reviewing the model’s performance, you notice that applicants from certain minority groups are being denied loans at a higher rate than other groups, even when their creditworthiness is similar. Your team suspects the model may be biased and needs to take steps to address this issue.

Question: What is the most appropriate next step to mitigate bias in your AI model?

a. Re-weight the data to give more importance to underrepresented groups and improve model fairness.

b. Remove demographic attributes from the dataset to prevent the model from learning biased patterns.

c. Adjust the output probabilities of the model using post-processing techniques to ensure fair outcomes.

d. Apply adversarial debiasing during model training to reduce the influence of protected attributes like race.

Answer

d. Apply adversarial debiasing during model training to reduce the influence of protected attributes like race.

Key Terms & Definitions

Adversarial Debiasing

An in-processing technique where two models are trained simultaneously: one performs the main task, while another attempts to predict a protected attribute (such as gender or race). The goal is to minimize the second model’s ability to predict the protected attribute, thereby reducing bias in the main task model.

Calibration

A post-processing method that adjusts the predicted probabilities of an AI model to account for bias. This ensures that predictions are more aligned with real-world outcomes, helping to balance performance across different demographic groups.

Counterfactual Fairness

A fairness criterion under which a model’s prediction remains the same if a protected attribute is hypothetically changed while all other relevant attributes are held constant.

Demographic Parity

A fairness criterion under which positive predictions are assigned at similar rates across demographic groups, so that the model’s predictions are independent of protected attributes like gender or race.

Equalized Odds

A fairness criterion that requires equal true positive and false positive rates (and therefore equal false negative rates) across protected groups.

Individual Fairness

A fairness criterion under which individuals who are similar in the ways relevant to a decision receive similar predictions from the model.

Relabeling

A post-processing technique that involves changing the labels assigned by the model to ensure fairer outcomes. This is typically done when a model exhibits bias toward certain groups, and the labels are adjusted to equalize outcomes across these groups.

Resampling or Synthetic Data Generation (e.g., SMOTE)

A method used to balance imbalanced datasets by either duplicating underrepresented data points (resampling) or generating new synthetic data points (e.g., SMOTE – Synthetic Minority Over-sampling Technique). SMOTE generates new samples by interpolating between existing minority class examples to help the model learn from a more balanced dataset.

Re-weighting

A pre-processing technique that adjusts the importance of certain data points in the training set to account for underrepresented groups. This helps balance the model’s learning and reduces bias by giving more weight to data from marginalized or minority groups.

Further Learning

To deepen your understanding of the topics discussed in this lesson, we invite you to explore the following resources:

- Book: “Fairness and Machine Learning: Limitations and Opportunities” by Solon Barocas, Moritz Hardt, and Arvind Narayanan.

- Book: “Unmasking AI: My Mission to Protect What Is Human in a World of Machines” by Joy Buolamwini.

- Academic Paper: “Fairness and Bias in Artificial Intelligence: A Brief Survey of Sources, Impacts, and Mitigation Strategies” by Emilio Ferrara (Sci, 2024)

- Open Source Toolkit: IBM’s AI Fairness 360

- Open Source Toolkit: Google’s What-If Tool (WIT)

- Article: “Sins of the machine: Fighting AI bias” by Michael Blanding (Berkeley Haas Newsroom, October 24, 2023).