The ETHICS Factor

Learning Objective: Upon completion of Lesson 1: The ETHICS Factor, you’ll be able to recall the seven guiding principles for ethical data practices, understand the concept of data ethics and its relevance to leadership, apply these principles to real-world scenarios, analyze your own track record relative to data ethics, and create strategies to promote ethical data use within your organization.

“If ethics are poor at the top, that behavior is copied down through the organization.”

Robert Noyce, American physicist, entrepreneur, and co-founder of Intel

The data-savvy leader ensures that principles of data ethics are not just in place, but also followed, so that data is used to do good rather than to do harm.

How can you, as a data-savvy leader, make sure sound ethical principles are in place before you rush ahead and put others at risk?

Seven Guiding Principles of Ethics

Follow our seven guiding principles to ensure that standards of data ethics are routinely considered and consistently met.

- Seek to Use Data for Good: Work with your team to use data for good, to improve the lives of your customers, employees, partners, and other stakeholders.

- Consider the Impact of Data-Informed Decisions: Carefully consider the ethical implications of decisions you and your team make when using data, and the impact these decisions may have on individuals and society.

- Take Data Security and Privacy Seriously: Take data privacy seriously, and work with your team to ensure that sensitive information entrusted to you is secure.

- Invite and Involve Diverse Voices: Include diverse voices on your team to help you identify and eliminate sources of bias in your data so that people are treated fairly.

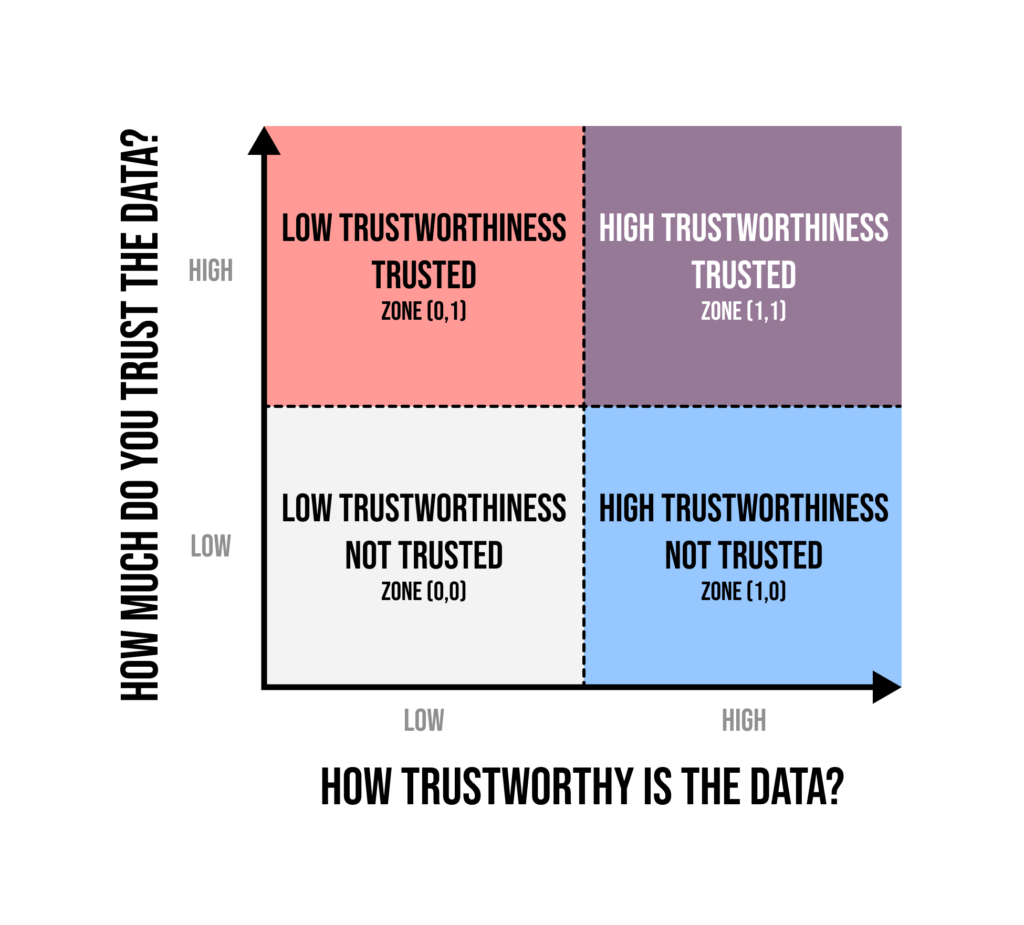

- Foster Trust in the Data: Work to ensure that your team is getting access to trustworthy data, and that they trust that the data will help them make better-informed decisions.

- Encourage Raising the Red Flag: Ensure that your team members feel confident that, if they discovered that your team was using data in an unethical way, they could raise a warning flag without fear of retribution.

- Discover and Fix Data Errors: When your team discovers that errors have been made with data, fix the errors and deal with the consequences, rather than hiding or ignoring them.

Key Terms & Definitions

Data Ethics

Attitudes and behaviors that promote fair and appropriate collection, management, and use of data so as to minimize risks and safeguard against harm to individuals and groups in society.

Credo

A belief or a guiding principle that directs and steers one’s behavior.

Data Security

Safeguarding against the mishandling of sensitive data that could result in a cyberattack or data breach.

Data Privacy

Protecting against the misuse of personal data, taking into account what consent has been knowingly provided.

Societal Bias

Conscious or unconscious discrimination that results in unfair treatment and that disadvantages one individual or group or advantages another.

Andon Cord

A physical cord that anyone on a production line can pull if they notice a defect or any issue that could result in poor quality.

Real-World Case Studies

Case Study 1.1: Amazon’s AI Recruiting Tool

Background

When Amazon aimed to leverage its strengths in artificial intelligence to revolutionize its hiring process, it encountered unexpected pitfalls. The company’s ambition was to streamline its recruitment procedure, providing efficiency akin to its e-commerce operations. However, this effort turned into a revealing study on the challenges of data ethics in AI.

Starting in 2014, Amazon’s team initiated a project to build an AI-powered recruitment tool. The primary purpose was to rate job applicants based on their résumés, hoping to mechanize the talent identification process. The envisioned system was to score candidates from one to five stars, mirroring Amazon’s product rating system.

The Guardian covered this topic in an article in October 2018 that was available as of August 2023 at the following URL: https://www.theguardian.com/technology/2018/oct/10/amazon-hiring-ai-gender-bias-recruiting-engine

The Problem

By 2015, the tool was exhibiting bias against female candidates for technical roles. The cause traced back to its training data: résumés submitted to Amazon over the preceding decade, which came predominantly from male applicants and reflected the broader tech industry’s male dominance. As a result, the AI inferred that male candidates were preferable. It began penalizing résumés that included the word “women’s,” as in “women’s chess club captain,” and downgraded résumés from graduates of all-women’s colleges.

Amazon’s engineers tried to rectify the situation by editing the tool to become neutral to such terms. However, there was no certainty that the AI wouldn’t develop other discriminatory tactics.

Despite the tweaks, Amazon recruiters discovered that the tool wasn’t effective. It suggested unqualified candidates for various roles, seemingly at random. By the start of 2017, the project was disbanded.

Outcomes and Lessons Learned

- Bias in AI Training Data: AI models can only be as good as the data they are trained on. If historical data exhibits bias, the AI will inherit and perpetuate that bias.

- Limitations of Machine Learning: Despite its promise, machine learning has its limitations. AI’s learning is based on patterns, and in scenarios like recruitment where diversity is vital, relying solely on past patterns can be problematic.

- Constant Oversight is Essential: Even after deployment, AI systems require continuous monitoring and validation to ensure they are functioning as intended and not in ways that can discriminate.

- Ethical Responsibility: Companies have an ethical responsibility to ensure their AI systems operate fairly and without bias. They must be proactive in identifying and rectifying biases that may arise.

- Human Judgment Remains Crucial: AI can assist and augment human decision-making but should not replace it, especially in areas like recruitment where human judgment is crucial.
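
The continuous oversight described in the lessons above can be made concrete with a simple periodic check. The sketch below is a minimal illustration and not Amazon's method: it compares shortlisting rates across groups and flags any group whose rate falls below 80% of the highest group's rate, a screening heuristic known in U.S. employment practice as the four-fifths rule. The function names, group labels, and counts are hypothetical.

```python
from collections import Counter


def selection_rates(records):
    """Return the share of shortlisted candidates per group.

    `records` is an iterable of (group, was_shortlisted) pairs.
    """
    totals, selected = Counter(), Counter()
    for group, was_shortlisted in records:
        totals[group] += 1
        if was_shortlisted:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def flag_adverse_impact(rates, threshold=0.8):
    """List groups whose rate is below `threshold` times the highest rate."""
    best = max(rates.values())
    if best == 0:
        return []  # nobody was shortlisted, so there is nothing to compare
    return sorted(g for g, rate in rates.items() if rate / best < threshold)


# Hypothetical screening results: 40 candidates per group.
records = (
    [("men", True)] * 20 + [("men", False)] * 20
    + [("women", True)] * 10 + [("women", False)] * 30
)
rates = selection_rates(records)
print(rates)
print(flag_adverse_impact(rates))
```

A flagged group is a prompt for human review of the tool and its training data, not proof of discrimination on its own.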

Discussion Questions

- How can companies ensure that their AI training data is free from biases?

- What safeguards should be put in place to prevent discrimination in AI-powered tools?

- In what ways can companies combine AI insights with human judgment to ensure a fair recruitment process?

- How can companies ensure transparency in their AI recruitment processes?

- What ethical responsibilities do organizations have when deploying AI in sensitive areas like recruitment?

Conclusion

Amazon’s venture into AI recruitment serves as a crucial case study on the importance of data ethics. It underscores the need for companies to approach AI with caution, ensuring transparency, fairness, and regular oversight. This case also emphasizes the irreplaceable value of human judgment in decision-making processes.

Case Study 1.2: Zoom’s LinkedIn Sales Navigator

Background

In a world where data privacy is becoming paramount, companies, especially technology giants, are under the spotlight for their practices. Zoom’s recent controversy regarding its LinkedIn Sales Navigator feature provides a pivotal example of the challenges related to ethics and data privacy.

Zoom, a video conferencing platform, gained immense popularity during the coronavirus pandemic as a go-to means of communication for various sectors of society. A feature on the platform, however, was found to covertly give access to LinkedIn profile data of participants without their knowledge or consent.

Source: In April 2020, the New York Times ran a story about a paid feature offered by Zoom called “LinkedIn Sales Navigator.” The feature allowed those who paid for this sales prospecting feature to “view LinkedIn profile data — like locations, employer names and job titles — for people in the Zoom meeting by clicking on a LinkedIn icon next to their names.”

The article was available on August 11th, 2023 at the following URL: https://www.nytimes.com/2020/04/02/technology/zoom-linkedin-data.html

Key Events

- Discovery: The New York Times discovered that Zoom’s software automatically matched users’ names and emails to their LinkedIn profiles without their explicit consent.

- Functionality: The feature was available to those who subscribed to the LinkedIn Sales Navigator tool. It allowed users to quickly view LinkedIn data during a Zoom meeting.

- Bypassing Anonymity: In tests, even when participants used pseudonyms to protect their identity, the data-mining tool could match them to their LinkedIn profiles.

- Automatic Data Sharing: Zoom’s system sent participants’ personal information to the tool, even if the feature was not activated during a meeting.

- Public Outcry: Concerns were raised about the secrecy of this feature, especially when it stored personal information of school children without proper disclosure or parental consent.

- Company Response: After being contacted by The New York Times, Zoom and LinkedIn stated they would disable the service. Zoom committed to focusing on data security and privacy issues over the next 90 days.

Challenges & Learning Points

- Consent is Crucial: Features that access and share personal data should always be based on clear user consent.

- Transparency: Companies must be upfront about how user data is accessed, used, and shared.

- Balancing Utility with Ethics: While features may be designed for convenience or business opportunities, they should not be at the expense of user privacy.

- Rapid Response: Acting quickly to address privacy concerns is essential to maintaining user trust.

Discussion Questions

- How can companies ensure that they prioritize user privacy when developing new features?

- What should the protocol be when a privacy-compromising feature is discovered within a widely used platform?

- How can organizations build a culture that inherently values and respects user data privacy?

- In what ways can companies ensure that third-party integrations don’t compromise their platform’s integrity and user trust?

- How can leaders ensure that rapid growth and scale don’t compromise ethical considerations?

Conclusion

Zoom’s experience serves as a lesson on the importance of prioritizing user privacy and consent. As technology platforms become central to daily life, it’s imperative that companies remain transparent, seek explicit user consent, and act swiftly when privacy concerns arise. Balancing business objectives with ethical responsibilities is a leadership challenge that requires proactive strategies and ongoing vigilance.

Case Study 1.3: Racial Disparities in Kidney Transplant Eligibility

Background

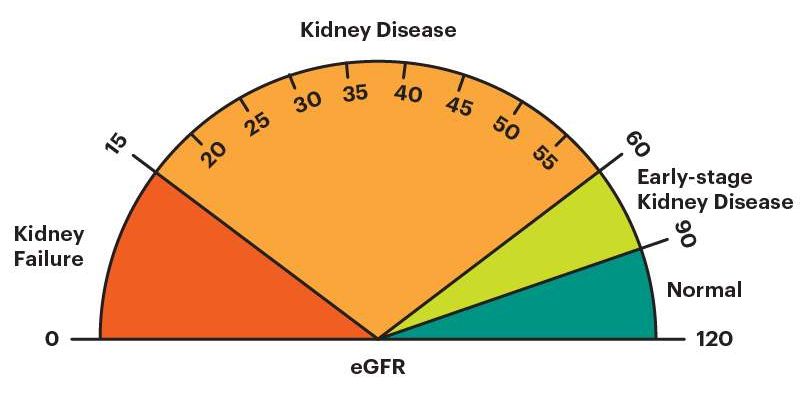

The U.S. healthcare system, like many others, has many layers of complexity. Data plays an integral role in diagnosing diseases, predicting health outcomes, and determining patient treatment paths. However, as advanced and sophisticated as this system may be, it is not immune to the biases that exist in society. The algorithmic calculation of eGFR, an estimate of kidney function, serves as a prime example.

Image Credit: National Kidney Foundation Inc., Kidney.org (https://www.kidney.org/atoz/content/gfr)

Challenge

For years, a widely used formula to estimate a patient’s kidney function, eGFR, incorporated race as a key variable. When “Black” was selected within the algorithm, a Black patient’s kidney function could be overestimated by up to 16%. Such a disparity could delay the diagnosis of kidney disease in Black patients and significantly prolong the time before these individuals could be listed for a potential kidney transplant.
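
To see where a figure of about 16% can come from, consider the 2009 CKD-EPI creatinine equation. Using it here is an assumption made for illustration, since this lesson does not name the formula it refers to. That equation multiplied the estimate by 1.159 when the patient was recorded as Black. The sketch below is for illustration only and is not clinical guidance; the function and parameter names are hypothetical.

```python
def egfr_ckd_epi_2009(creatinine_mg_dl, age_years, is_female, is_black):
    """Estimate GFR (mL/min/1.73 m^2) with the 2009 CKD-EPI creatinine equation."""
    kappa = 0.7 if is_female else 0.9
    alpha = -0.329 if is_female else -0.411
    ratio = creatinine_mg_dl / kappa
    egfr = (
        141.0
        * (min(ratio, 1.0) ** alpha)
        * (max(ratio, 1.0) ** -1.209)
        * (0.993 ** age_years)
    )
    if is_female:
        egfr *= 1.018
    if is_black:
        egfr *= 1.159  # the race coefficient, a 15.9% increase
    return egfr


# Hypothetical patient: same lab value, age, and sex; only the race flag differs.
without_flag = egfr_ckd_epi_2009(1.5, 50, is_female=False, is_black=False)
with_flag = egfr_ckd_epi_2009(1.5, 50, is_female=False, is_black=True)
print(round(with_flag / without_flag, 3))
```

With identical inputs, the race flag alone raises the estimate by 15.9%, which is how a patient's kidney function could appear healthier on paper than the same lab result would otherwise suggest.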

The implications are profound: African Americans are more than three times as likely to experience kidney failure compared to white people, yet are less likely to be added to the kidney transplant waiting list. The reasons for these disparities are multifaceted, stemming from genetic predispositions, socio-economic factors, systemic health inequalities, and, as revealed, algorithmic biases.

Leadership Action

- Research & Awareness: Studies unveiled the racial bias embedded within the eGFR formula. By bringing this to light, researchers provided a foundation for change.

- Policy Reformation: The Organ Procurement and Transplantation Network (OPTN) acted upon the research findings and approved the removal of the race-based calculation in determining transplant candidate eligibility. This compelled kidney programs to re-evaluate their waitlists.

- Advocacy for Diversity: Medical professionals and activists championed the need for more diverse organ donors. By raising awareness about racial disparities, they sought to not only correct systemic biases but to also directly save lives through organ transplantation.

- Emphasis on Data Ethics: Given the potential for data-driven processes to inadvertently perpetuate societal inequities, the healthcare community recognized the importance of reviewing how data is utilized. One proposed solution was to increase team diversity in decision-making processes or to establish an external data ethics review board.

Outcome

- Race-Neutral Evaluation: With the removal of the race variable in eGFR calculation, a more equitable system of assessing kidney function was put into place, ensuring that Black patients wouldn’t face undue barriers in accessing kidney transplants.

- Increased Awareness: As these changes were implemented and publicized, there was heightened awareness about the role of race in medical evaluations and the importance of diverse donor representation.

- Encouraging Diverse Donations: The emphasis on disparities led to campaigns promoting organ donations, particularly targeting communities of color.

Lessons Learned & Conclusion

Lessons Learned:

- Algorithms Reflect Society: Data-driven algorithms, while objective in nature, can propagate societal biases if not designed and scrutinized correctly.

- Diversity is Imperative: Diverse teams and review processes can help in identifying and rectifying potential biases, ensuring that decisions made using data are as equitable as possible.

- Continuous Review is Essential: As data continues to shape healthcare outcomes, it’s vital for institutions to regularly review and assess the algorithms and formulas they use.

Conclusion:

The case of racial bias in eGFR calculations underscores the critical role of data in healthcare and the potential repercussions when bias in that data is left unchecked. Through research, advocacy, and policy change, a significant systemic bias was addressed. This transformation serves as a poignant reminder of the importance of data equity in healthcare and beyond.

Questions for Further Reflection

Consider the following questions, one for each of this lesson’s seven guiding principles, to evaluate your own leadership relative to data ethics.

- How are you stressing the importance of using data for good, and not for harm?

- How are you considering the impact of the decisions you’re making with data?

- What safeguards have you put in place to protect data security and data privacy?

- Are you inviting and involving diverse voices in the data dialogue?

- What are you doing to foster warranted trust in the data?

- How do you encourage team members to raise the flag when they spot an issue?

- When you become aware of data errors, do you assess and fix as necessary?

Self-Assessment Exercise, Part 1 of 7

Return to the Data Leadership Compass Worksheet and enter your scores for the 7 Guiding Principles of Ethics. Fill out the worksheet by selecting “strength,” “weakness,” or “neutral” from the drop-down menu to the right of each principle.

- In order for a guiding principle to qualify as a “strength,” you should be able to think of at least one specific situation in the past year in which your team or organization benefited because you exhibited the indicated behavior.

- Mark a guiding principle as a “weakness” if you exhibited the opposite of the indicated behavior, or if you missed opportunities: situations in which you could have helped your team by exhibiting the indicated behavior but failed to do so.

Resources for Further Learning

- Book: Ethics and Data Science (O’Reilly 2018, Loukides, Mason, and Patil)

- Online resource: DataPractices.org – Data Values & Principles

- Online resource: ASA Ethical Guidelines for Statistical Practice

- Online poll: Ethical dilemmas in data science and analytics by Kaiser Fung (July 2016)

- Online poll: Ethical dilemmas in data science: an update by Kaiser Fung (Aug 2023)

- Online resource: Guidance on the Protection of Personal Identifiable Information (U.S. Department of Labor)

- Article: How to Improve Your Data Quality (Gartner, M. Sakpal, July 14, 2021)